VALUING, SIZING AND SOURCING THE HIGH-TECH PRODUCT

Evaluation is the process of making the case for high-tech decisions on the basis of the benefits and costs associated with a project or its product features. Assessing the value and cost of features for development is often regarded either as a simple problem of ascribing value for money, or as an obscure process, part inspiration and part politics, in which decisions are made behind closed doors.

INTRODUCTION

How do we evaluate high-tech objects and the objectives of systems development projects? Organizations want to control and direct their destinies. Organizational technology strategies therefore need the support of tools and methods as aids to making investment decisions (Powell, 1992). We need to be able to answer the following questions:

- How should we go about evaluating high tech use and investment decisions?

- How useful are the various approaches, and what, if anything, do they ignore?

- When we select and value high-tech features and products, how do we go about making those decisions?

- What different approaches are available to help us evaluate choices between different products, services, features, and suppliers?

Evaluation is the process by which we decide whether or not to purchase or commit ourselves to something. Evaluation activities are by definition decisive periods in the life of any high-tech project. Evaluation is often assumed to rest on overwhelmingly rational, economic criteria; however, it may also be an emotional, impulsive or political decision (Bannister and Remenyi, 2000). There is a plethora of tools for making optimal financial decisions, based on the premise that significant aspects of the system can be monetized. But there are also tools that help us reveal unquantifiable aspects and soft factors, to support the formation of qualitative decisions.

UNDERSTANDING EVALUATION

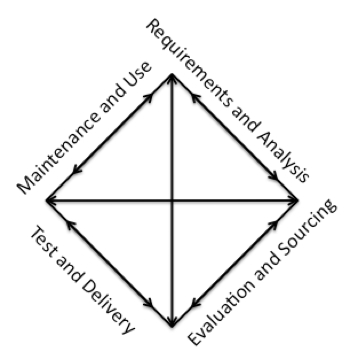

All decisions arise from a process of evaluation, either explicit or implied: the process of valuing an option by balancing its benefits against its costs. Furthermore, decisions arise throughout the development life cycle as and when options are identified. Formally, the systems development life cycle (SDLC) describes evaluation as a separate phase and activity; in practice, however, evaluation takes place continuously, albeit with shifts in frequency, formality or emphasis.

Having gathered user requirements by observing behaviour in the field, how do we analyze and judge them, and identify significant patterns or benefits for inclusion in new developments?

VALUE AND COST

When a high-tech investment delivers value in the form of payouts over time, financial tools such as return on investment (ROI) and net present value (NPV) can be used as aids to the decision: to invest or not to invest. Improved financial performance is an important criterion for judging an IT funding opportunity. Payouts may take the form of estimable cost savings or additional periodic revenue. While financial performance measures are not always assessable or relevant to every investment decision or project commitment, they should be created (and their assumptions made explicit) wherever possible, as one of a range of inputs into the decision-making process.

Financial measures are often the easiest to create and maintain for organizational decision-making as they readily incorporate different assumptions as factors change.

ROI

The return on investment (ROI) is the ratio of an investment's net payout (the total return less the initial investment) to the initial investment. The ROI represents the interest rate earned over the whole period considered, not an annual rate.
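A minimal sketch of the ROI calculation, assuming "payout" means the net gain (total returned less the initial investment), which is the usual convention. The function names and the figures are hypothetical. Because ROI is a rate over the whole period, a second helper converts it into the equivalent compound annual rate for comparison with annual interest rates.

```python
def roi(total_return: float, investment: float) -> float:
    """ROI over the whole period: net gain divided by the initial investment."""
    if investment <= 0:
        raise ValueError("investment must be positive")
    net_gain = total_return - investment
    return net_gain / investment


def annualized_rate(roi_over_period: float, years: float) -> float:
    """Equivalent compound annual rate for an ROI earned over `years` years."""
    return (1 + roi_over_period) ** (1 / years) - 1


# Hypothetical figures: 100,000 invested, 130,000 returned over three years.
r = roi(130_000, 100_000)
print(f"ROI over the period: {r:.1%}")                   # 30.0%
print(f"Annualized rate: {annualized_rate(r, 3):.2%}")   # 9.14%
```

A 30% ROI over three years corresponds to roughly 9.14% a year, which is why the period over which an ROI is quoted should always be stated.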

Payback Period

Another metric for evaluating investment decisions is the Payback period: the time taken for an investment to be repaid. Where revenue (or savings) is the same in each period, it is simply the initial investment divided by the revenue per period.
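A minimal sketch of the Payback period under two assumptions: equal revenue in every period (the simple division), and, for the uneven case, revenue accruing evenly within the period in which break-even occurs, so that a fractional period is returned. Function names and figures are hypothetical.

```python
import math
from typing import Optional


def payback_period(investment: float, revenue_per_period: float) -> float:
    """Simple payback with equal revenue (or savings) in every period."""
    if revenue_per_period <= 0:
        return math.inf  # the investment is never repaid
    return investment / revenue_per_period


def payback_uneven(investment: float, cash_flows: list[float]) -> Optional[float]:
    """Payback when revenue varies by period. Within the break-even period,
    revenue is assumed to accrue evenly, so a fractional period is returned.
    Returns None if the investment is not repaid within the horizon."""
    cumulative = 0.0
    for period, flow in enumerate(cash_flows, start=1):
        if flow > 0 and cumulative + flow >= investment:
            return period - 1 + (investment - cumulative) / flow
        cumulative += flow
    return None


print(payback_period(100_000, 25_000))                            # 4.0
print(payback_uneven(100_000, [20_000, 30_000, 40_000, 50_000]))  # 3.2
```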

Net Present Value

The Net Present Value (NPV) method takes account of interest rates (or the cost of money) in the investment model. Each future net revenue or saving is discounted to its present value using the interest rate ‘i’ and the period ‘n’ in which it arises. The NPV of an investment is the difference between the sum of these present values and the initial investment; it therefore summarizes the value of an investment decision in terms of the present value of all its future returns, net of its cost.
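Written out, the calculation is NPV = R_1/(1 + i)^1 + R_2/(1 + i)^2 + ... + R_N/(1 + i)^N − C_0, where R_n is the net revenue or saving in period n and C_0 the initial investment. A minimal sketch, assuming every flow arrives at the end of its period; the function name and project figures are hypothetical.

```python
def npv(rate: float, investment: float, cash_flows: list[float]) -> float:
    """Net present value: discounted future net flows minus the upfront investment.

    rate        -- per-period interest (discount) rate i, e.g. 0.08 for 8%
    investment  -- initial outlay at time 0 (a positive number)
    cash_flows  -- net revenue or saving for periods n = 1, 2, ...,
                   each assumed to arrive at the end of its period
    """
    present_value = sum(
        flow / (1 + rate) ** n for n, flow in enumerate(cash_flows, start=1)
    )
    return present_value - investment


# Hypothetical project: 100,000 outlay, 30,000 net saving per year for 5 years.
savings = [30_000] * 5
for rate in (0.0, 0.08, 0.20):
    print(f"i = {rate:.0%}: NPV = {npv(rate, 100_000, savings):,.0f}")
```

The same project is worth 50,000 undiscounted, about 19,781 at 8%, and is negative at 20%, which shows how strongly the conclusion depends on the interest rate assumed.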

As with Payback, there is usually a simple break-even point for any particular interest rate, beyond which the cumulative present value of future payments (or annuities) results in a net positive return. NPV is a good way of differentiating between investment alternatives; however, the assumptions built into the model should be made explicit. For example, payouts do not always occur at the end of the period, interest rates may change, inflation may need to be considered, and payments may not materialize or may be greater than expected.
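The break-even point at a given interest rate can be found by accumulating discounted flows until they cover the investment (often called the discounted payback period). A minimal sketch, assuming end-of-period flows; the function name and figures are hypothetical.

```python
from typing import Optional


def discounted_payback(rate: float, investment: float,
                       cash_flows: list[float]) -> Optional[int]:
    """First period by whose end the cumulative discounted flows have repaid
    the investment, i.e. the break-even point at this interest rate.
    Flows are assumed to arrive at the end of each period; returns None if
    break-even is not reached within the horizon."""
    cumulative = -investment
    for n, flow in enumerate(cash_flows, start=1):
        cumulative += flow / (1 + rate) ** n
        if cumulative >= 0:
            return n
    return None


savings = [30_000] * 5  # hypothetical: five annual savings of 30,000
for rate in (0.0, 0.08, 0.20):
    print(f"i = {rate:.0%}: break-even in period "
          f"{discounted_payback(rate, 100_000, savings)}")
```

Break-even moves from period 4 at 0% to period 5 at 8%, and is not reached within five years at 20%; running the model across several rates is a simple way of making the interest-rate assumption explicit.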

Internal Rate of Return

Having calculated the NPV of the investment from compounded monthly cash flows at a particular interest rate, it becomes evident that there may be an interest rate at which the NPV of the investment becomes zero. This is known as the internal rate of return (IRR): the effective interest rate at which the present value of an investment’s costs equals the present value of all the investment’s anticipated returns.
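Because the IRR is the rate at which NPV is zero, it can be found numerically. A minimal sketch using bisection, assuming a conventional cash-flow profile (one outflow followed by inflows), so that NPV changes sign only once on the search bracket; function names, the bracket and the figures are hypothetical. Spreadsheet and library IRR functions typically use faster iterative methods, but the idea is the same.

```python
def npv(rate: float, cash_flows: list[float]) -> float:
    """NPV where cash_flows[0] is the time-0 investment (negative) and
    cash_flows[t] arrives at the end of period t."""
    return sum(flow / (1 + rate) ** t for t, flow in enumerate(cash_flows))


def irr(cash_flows: list[float], low: float = -0.99, high: float = 1.0,
        tol: float = 1e-9, max_iter: int = 200) -> float:
    """Internal rate of return found by bisection on [low, high].

    Assumes NPV changes sign exactly once on the bracket, as it does for a
    conventional profile (one outflow followed by inflows)."""
    npv_low = npv(low, cash_flows)
    if npv_low * npv(high, cash_flows) > 0:
        raise ValueError("NPV does not change sign on [low, high]; widen the bracket")
    for _ in range(max_iter):
        mid = (low + high) / 2
        npv_mid = npv(mid, cash_flows)
        if npv_mid == 0 or (high - low) / 2 < tol:
            return mid
        if npv_low * npv_mid < 0:
            high = mid                     # root lies in the lower half
        else:
            low, npv_low = mid, npv_mid    # root lies in the upper half
    return (low + high) / 2


flows = [-100_000] + [30_000] * 5  # hypothetical: outlay, then five annual inflows
rate = irr(flows)
print(f"IRR = {rate:.2%}")         # roughly 15.24%
```

The rate returned is per period. If the flows are monthly, as in the paragraph above, (1 + rate) ** 12 - 1 converts it into an effective annual rate.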

The IRR serves as a cut-off interest figure for deciding whether or not to proceed with a particular investment (a threshold rate or break-even point), while the NPV calculated at a particular interest rate gives the effective payout at that rate. An organization may define an internal cost of capital figure (a hurdle rate) that is higher than market money rates. If the IRR of a project is projected to be below the hurdle rate, it may be rejected in favour of another project with a higher IRR.
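For conventional cash flows, "IRR at or above the hurdle rate" is equivalent to "NPV at the hurdle rate is non-negative", so projects can be screened without solving for the IRR at all. A minimal sketch under that assumption; the hurdle rate, project names and figures are hypothetical.

```python
def npv(rate: float, cash_flows: list[float]) -> float:
    """NPV with cash_flows[0] at time 0 and cash_flows[t] at the end of period t."""
    return sum(flow / (1 + rate) ** t for t, flow in enumerate(cash_flows))


def screen_projects(projects: dict[str, list[float]],
                    hurdle_rate: float) -> dict[str, tuple[float, str]]:
    """Accept a project when its NPV at the hurdle rate is non-negative.
    For conventional cash flows this is equivalent to IRR >= hurdle rate."""
    results = {}
    for name, flows in projects.items():
        value = npv(hurdle_rate, flows)
        results[name] = (value, "accept" if value >= 0 else "reject")
    return results


HURDLE_RATE = 0.12  # hypothetical internal cost of capital
projects = {        # hypothetical projects: outlay, then annual net inflows
    "CRM upgrade": [-100_000, 30_000, 30_000, 30_000, 30_000, 30_000],
    "Data warehouse": [-250_000, 40_000, 60_000, 70_000, 70_000, 70_000],
}
for name, (value, decision) in screen_projects(projects, HURDLE_RATE).items():
    print(f"{name}: NPV at {HURDLE_RATE:.0%} = {value:,.0f} -> {decision}")
```

The first project clears the 12% hurdle; the second is rejected even though its undiscounted inflows exceed its outlay, because its IRR is positive but below the hurdle rate.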

Discussion on financial rationality

Investment decisions imply the application of money, but also of time, resources, attention and effort, to address opportunities and challenges in the operating environment. An often unexpected consequence of acting on the basis of a rational decision-making process is that the action itself alters the tableau of factors, which in turn reveals new opportunities (or challenges) that necessarily alter the bases on which earlier decisions were made. Some strategic technology decisions appear obvious: an organization needs a website, an email system, electronic invoicing, accounts and banking. Individual workers need computers, email, phones, shared calendars, file storage, etc. This is because technology systems have expanded to constitute the basic operational infrastructure of the modern organization and the (inter)networked citizen.

How, then, should we characterize the dimensions along which high-tech systems are evaluated? High-tech investment decisions have been classified along two distinct dimensions: technology scope and IT strategic objectives (Ross and Beath, 2002). Combining these two dimensions, Ross and Beath identified areas of application for organizations’ high-tech investment decisions (Figure below). Technology scope ranges from shared infrastructure (with global, systemic impact) through to stand-alone business solutions and specialized applications affecting single departments and operational divisions. IT strategic objectives differ in time horizon rather than scope: from long-term transformational growth (or survival) to short-term profitability and incremental gain. Both the scope and strategic dimensions highlight the organizational dependence on high-tech systems, and both suggest that purely financial justifications are not always practical or desirable.

Investment in shared infrastructure illustrates the case: the physical implementation and deployment costs of new IT and hardware may be quantifiable, but broader, diffuse costs and benefits arise through less tangible aspects such as lost or gained productivity, new opportunities and improved capabilities.

JUSTIFYING DECISIONS

Evaluation is a decision-making process. How do we decide which systems to use (develop or acquire, install and operate) in our organizations? We think of the process of making a decision as the process of evaluation. Evaluation processes have two contrasting dimensions, both of which need to be considered if evaluations, and the decisions that result, are to deliver their anticipated benefits: quantitative methods (often financial models) and subjective methods addressing intangible and non-financial aspects.

Each decision is, in a real sense, an investment decision for the organization. Identifying who fills which role in the decision-making process is a prerequisite; each actor then draws on various methods and tools to make their case. However, the decision maker cannot rely on pure fiat or role power to arrive at the best decision while also achieving consensus and buy-in. Decision makers therefore engage in persuasion, drawing on a mix of objective and subjective resources as evidence supporting their decision.

The justification of projects across the range of IT investment types must differ, as their costs and benefits differ in quantifiability, attainability, size, scale, risk and payout. We should therefore have a palette of tools to aid evaluation and decision-making. Ross and Beath (2002) make the case for “a deliberate rationale that says success comes from using multiple approaches to justifying IT investments.”

Powell (1992) presents a classification of the range of evaluation methods. Evaluation methods are broadly either objective or subjective. Objective methods are quantifiable, monetized, parameterized, aggregated, etc. Subjective methods are non-quantitative, attitudinal, empirical, anecdotal, case-based or problem-based. Quantitative methods include financial instruments and rule-based approaches. Multi-criteria and Decision Support System approaches cover cybernetic or AI-type systems that use advanced heuristics or rule systems to arrive at recommendations. Simulations are parameterized system models that can be used to assess different scenarios based on varying initial conditions and events.

Table: Evaluation methods. Adapted from Zakierski's classification of evaluation methods (Powell, 1992)

It is noteworthy, however, that many of these evaluation methods are in fact hybrid approaches, incorporating both subjective and objective inputs and criteria, e.g. value analysis (Keen, 1981).
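One common way of combining objective and subjective inputs is a weighted-scoring model, a simple multi-criteria technique. The sketch below is a generic illustration, not Keen's value analysis or any specific method from Powell's classification; the criteria, weights, options and scores are all hypothetical.

```python
def weighted_score(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted average of criterion scores; weights need not sum to 1."""
    missing = weights.keys() - scores.keys()
    if missing:
        raise KeyError(f"no score for criteria: {sorted(missing)}")
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(scores[c] * w for c, w in weights.items()) / total_weight


# Hypothetical criteria and weights: one financial (objective) input,
# three judged (subjective) ones.
weights = {"npv": 40, "strategic_fit": 30, "usability": 20, "supplier_risk": 10}

# Scores on a 1-5 scale; for supplier_risk a higher score means LOWER risk.
options = {
    "Option A": {"npv": 4, "strategic_fit": 3, "usability": 5, "supplier_risk": 2},
    "Option B": {"npv": 3, "strategic_fit": 5, "usability": 4, "supplier_risk": 4},
}
ranked = sorted(options, key=lambda o: weighted_score(options[o], weights),
                reverse=True)
for name in ranked:
    print(f"{name}: {weighted_score(options[name], weights):.2f}")
```

Option B ranks first (3.90 against 3.70) despite its weaker financial score, because the judged criteria carry 60% of the weight. The weights are themselves a subjective input, which is precisely what makes such methods hybrids.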

In accountancy, measurement and evaluation are considered separate, involving different techniques and processes. Furthermore, the evaluation process is expected to balance both quantitative and qualitative inputs. Bannister and Remenyi (2000) argue that the evidence suggests high-tech evaluations and investment decisions are made rationally, but not formulaically. This is partly because what can be measured is limited, and processes of evaluation involve the issue of ‘value’ more generally, not simply in monetary terms. Investment decisions must, they argue, involve the synthesis of both conscious and unconscious factors.

“To be successful management decision making requires at least rationality plus instinct.” (Bannister and Remenyi, 2000)

In practice, decision making is strongly subjective: while grounded in evidence, it also requires wisdom and judgement, abilities that decision makers acquire over time and in actual situations through experience, techniques, empathy with users, and deep knowledge of the market, of desires and of politics. A crucial stage of the decision-making process is problem formulation and articulation, which involves both reducing problems to their core elements and interpretation, “the methods of interpretation of data which use non-structured approaches to both understanding and decision making.” (Bannister and Remenyi, 2000). ‘Hermeneutic application’, as they describe it, is the process of translating perceived value into a decision that addresses a real problem or investment opportunity. It is necessary because the meaning of ‘value’ often remains undetermined; it may be (variously) price, effectiveness, satisfaction, market share, use, usability, efficiency, economic performance, productivity, speed, throughput, etc.

DISCUSSION

When should we consciously employ an evaluation approach? Evaluations are made, implicitly or explicitly, whenever we reach a decision point. Recognizing a decision point may appear obvious but is in fact often unclear at the time. Evaluations and decisions are made whenever an unexpected problem is encountered, when further resources are required to explore emerging areas of uncertainty, or when another feature is identified or becomes a ‘must have’.

Evaluation methods may be further categorized as ex-ante or ex-post. Ex-ante methods are aids to determining project viability before the project has commenced; they are exploratory forecasting tools, and their outputs are therefore speculative. Ex-post methods are summative approaches to assessing end results; they are therefore of limited value for assessing early-stage project viability.

Systems development life cycles bring high-tech product decisions, and therefore evaluation, into focus in different ways. Stage-gate models concentrate decision making at each stage-gate transition. Agile models make decision making explicit by formalizing the responsibilities of different roles on the project and their interactions on an ongoing basis. Both extremes aim to highlight the decision points, the person responsible for requesting (and therefore estimating) resources, and the person responsible for stating and clarifying what is needed (scope and requirements). Indeed, formalizing the separation of role ownership (between requirements and estimation) and of responsibilities (between value and delivery) is one of the key benefits of any life cycle.

Is the decision already made for us? As the high-tech and IT sectors mature, we see the gradual stabilization of the software, services and devices that make up the assemblage of tools and systems of our modern internetworked lives. Nicholas Carr (2005) predicts that we are witnessing the inevitable shift of computing from an organizational resource (and competence) into a background infrastructure. Several factors are driving this dynamic:

- Scale efficiency of development: specialist teams are best placed to develop complex, feature-rich, usable systems.

- Scale efficiency of delivery: global service uptime, latency and storage performance are best delivered by organizations with a global presence and specialist competencies in server farms, grid and cloud computing.

- The green agenda: savings are reaped by shifting computer power consumption from relatively inefficient desktops and office servers into energy-efficient data centres.

Carr’s point is that general-purpose computing is gradually shifting towards a ‘utility’ model, and that the era of corporate computing is therefore effectively dead. The consequence is the commoditization of software and services currently thought of as ‘in-house’ offerings, such as email, file storage, messaging and processing power. This trend changes the way we view high-tech and IT projects, their evaluation and their delivery to our organizations. The decision is no longer one of build versus buy (run and operate), but ‘rent’.
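The buy-versus-rent choice can still be framed with the discounting tools described earlier, by comparing the present value of each option's costs over the same horizon. A minimal sketch, assuming all recurring costs are paid at the end of each year (subscriptions are often paid in advance, which would raise the cost of renting slightly); the discount rate, horizon and cost figures are hypothetical.

```python
def pv_of_costs(rate: float, upfront: float, annual_costs: list[float]) -> float:
    """Present value of a time-0 cost plus costs paid at the end of each year."""
    return upfront + sum(
        cost / (1 + rate) ** year for year, cost in enumerate(annual_costs, start=1)
    )


RATE, YEARS = 0.08, 5  # hypothetical discount rate and evaluation horizon

# Hypothetical figures: buying means licences and hardware upfront plus yearly
# running costs; renting means a yearly subscription with nothing upfront.
buy = pv_of_costs(RATE, upfront=80_000, annual_costs=[12_000] * YEARS)
rent = pv_of_costs(RATE, upfront=0, annual_costs=[28_000] * YEARS)

print(f"PV of buying:  {buy:,.0f}")
print(f"PV of renting: {rent:,.0f}")
print("rent" if rent < buy else "buy", "is cheaper over", YEARS, "years")
```

With these figures renting is cheaper (about 111,796 against 127,913), but a longer horizon or a lower subscription-to-running-cost gap could reverse the result, and the calculation ignores the less tangible factors discussed above.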

CONCLUSIONS

Requirements and evaluation are crucial activities in the overall process, with the decisive moment surrounding evaluation: valuing and costing product features and projects. But the work of systems development presents complex issues. There are inevitable, intrinsic inequalities and asymmetries between the actors involved: product owners, developers, users, customers, organizations, businesses and other groups. Interaction is often characterized by persuasion: persuading and convincing those involved in producing, consuming and managing the development process.

REFERENCES